I manage a number of websites (about 60), and to automate part of that work I wrote a script that checks when their SSL certificates expire.

Most of the sites I manage use Let's Encrypt and are self-hosted on Linode, but sometimes `certbot renew` fails, or an external hosting provider decides to renew the certificate only 2 days before it expires.

Certbot renews a Let's Encrypt certificate automatically once fewer than 30 days remain, so this script rarely reports a problem.

The script therefore runs daily and warns me when a host's certificate is about to expire, so that I can check it manually.
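The alerting rule can be sketched on its own, separately from the network code. This is only an illustration: `expiring_soon` and the `*.example` host names are hypothetical, and the 25-day threshold is the one used in the script further down.

```perl
use strict;
use warnings;

# Hypothetical helper (not part of the original script): given a hash ref of
# site => days remaining, return one warning line per site below the
# threshold, soonest expiration first.
sub expiring_soon {
    my ($days_for, $threshold) = @_;
    my @lines;
    for my $site (sort { $days_for->{$a} <=> $days_for->{$b} or $a cmp $b }
                  keys %$days_for) {
        my $days = $days_for->{$site};
        next if $days >= $threshold;
        my $unit = abs($days) == 1 ? "day" : "days";
        push @lines, "$site => in $days $unit";
    }
    return @lines;
}

# Made-up sample data, for illustration only.
my %example = (
    'a.example' => 40,
    'b.example' => 3,
    'c.example' => 1,
    'd.example' => 24,
);
print "$_\n" for expiring_soon(\%example, 25);
```

Keeping the rule in a small function like this makes it easy to change the threshold or the wording of the message without touching the certificate lookup.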

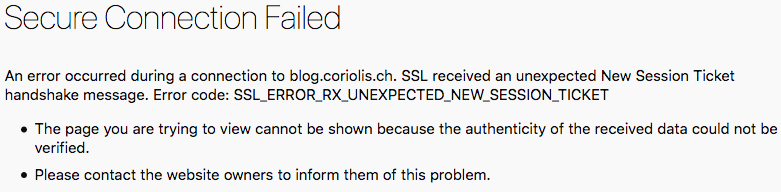

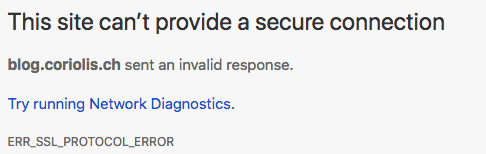

The script originally used Net::SSL::ExpireDate to read the expiration date, but that module does not seem to get along with Cloudflare certificates, so I added a second function that gets the expiration date with openssl.
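As a rough sketch of the openssl approach: `openssl x509 -noout -enddate` prints a line of the form `notAfter=Jan  1 00:00:00 2030 GMT`, which then has to be turned into a number of days. The helpers `enddate_to_epoch` and `days_left` below are hypothetical names, the sample line is made up, and the parsing uses the core module Time::Local instead of Date::Parse (which the actual script uses) so that the example has no external dependencies.

```perl
use strict;
use warnings;
use Time::Local qw(timegm);

my %MONTH = (
    Jan => 0, Feb => 1, Mar => 2, Apr => 3, May => 4,  Jun => 5,
    Jul => 6, Aug => 7, Sep => 8, Oct => 9, Nov => 10, Dec => 11,
);

# Hypothetical helper: turn a "notAfter=..." line into epoch seconds (UTC).
# Returns undef when the line does not look like an -enddate output.
sub enddate_to_epoch {
    my ($line) = @_;
    return unless defined $line
        && $line =~ /notAfter=(\w{3})\s+(\d{1,2})\s+(\d{2}):(\d{2}):(\d{2})\s+(\d{4})\s+GMT/;
    my ($mon, $day, $h, $m, $s, $year) = ($1, $2, $3, $4, $5, $6);
    return unless exists $MONTH{$mon};
    return timegm($s, $m, $h, $day, $MONTH{$mon}, $year);
}

# Whole days between two epoch values; int() truncates toward zero.
sub days_left {
    my ($expiry_epoch, $now_epoch) = @_;
    return int(($expiry_epoch - $now_epoch) / 86400);
}

# Made-up sample line, for illustration only.
my $sample = "notAfter=Jan  1 00:00:00 2030 GMT\n";
my $epoch  = enddate_to_epoch($sample);
print "days left: ", days_left($epoch, time), "\n" if defined $epoch;
```

Returning undef for anything that does not match lets the caller treat an openssl error message the same way as a missing date.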

The script is available on GitHub:

https://gist.github.com/sburlot/9a26255cc5b7d6b703fb37d40867baec

Usage: enter the list of sites by editing the line:

my @sites = qw/coriolis.ch textfiles.com/;

and run the script from crontab.

Useful links:

https://prefetch.net/articles/checkcertificate.html

https://www.cilogon.org/cert-expire

#!/usr/bin/perl
# vi:set ts=4 nu:

use strict;
use warnings;
use POSIX 'strftime';
use Net::SSL::ExpireDate;
use Date::Parse;
use Data::Dumper;
use MIME::Lite;

my $status = "";                            # report, one line per site
my @sites = qw/coriolis.ch textfiles.com/;  # hosts to check
my $error_sites = "";                       # lines that end up in the email
my %expiration_sites;                       # site => days remaining

################################################################################################
# Get the expiration date by piping `openssl s_client` into `openssl x509`.
sub check_site_with_openssl($) {
    my $site = shift @_;

    # "echo |" closes stdin so that s_client exits right after the handshake.
    my $expire_date = `echo | openssl s_client -servername $site -connect $site:443 2>&1 | openssl x509 -noout -enddate 2>&1`;
    if ($expire_date !~ /notAfter/) {
        print "Error while getting info for certificate: $site\n";
        $error_sites .= "$site has no expiration date\n";
        return;
    }

    # The output looks like "notAfter=<date>"; keep only the date.
    $expire_date =~ s/notAfter=//g;
    chomp $expire_date;
    my $time = str2time($expire_date);
    if (!defined $time) {
        $error_sites .= "$site has an unparseable expiration date\n";
        return;
    }
    my $now = time;
    my $days = int(($time-$now)/86400);
    # $status is built once, sorted, in the main loop below.
    $expiration_sites{$site} = $days;
    print "$site expires in $days days\n";

    if ($days < 25) {
        $error_sites .= "$site => in $days day" . ($days > 1 ? "s":"") . "\n";
    }
}

################################################################################################
# Earlier implementation based on Net::SSL::ExpireDate; the main loop no longer calls it.
sub check_site($) {
    my $site = shift @_;

    # we have an error for sites served via Cloudflare: record type is SSL3_AL_FATAL
    # Net::SSL doesn't support SSL3??
    my $ed = Net::SSL::ExpireDate->new( https => $site );
    #print Dumper $ed;

    if (defined $ed->expire_date) {
        my $expire_date = $ed->expire_date; # returns a DateTime instance
        my $time = $expire_date->epoch;     # DateTime provides the epoch directly
        my $now = time;
        my $days = int(($time-$now)/86400);
        $expiration_sites{$site} = $days;
        print "$site expires in $days days\n";

        if ($days < 25) {
            $error_sites .= "$site => in $days day" . ($days > 1 ? "s":"") . "\n";
        }
    } else {
        $error_sites .= "$site has no expiration date\n"; # or has another error, but I'll check manually.
    }
}

################################################################################################
sub send_email($) {
    my $message = shift @_;

    my $msg = MIME::Lite->new(
        From    => 'me@website.com',
        To      => 'me@website.com',
        Subject => 'SSL Certificates',
        Data    => "One or more certificates should be renewed:\n\n$message\n"
    );
    $msg->send;
}

################################################################################################
print strftime "%F\n", localtime;
print "="x30 . "\n";

for my $site (sort @sites) {
    check_site_with_openssl($site);
}

# sort ascending by days remaining (soonest expiration first)
foreach my $site (sort { $expiration_sites{$a} <=> $expiration_sites{$b} } keys %expiration_sites) {
    $status .= "$site expires in " . $expiration_sites{$site} . " days\n" ;
}

print "="x30 . "\n";

if ($error_sites ne "") {
    send_email($error_sites);
}
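
One thing to keep in mind with the backtick call in `check_site_with_openssl`: the site name is interpolated directly into a shell command. That is fine for a hand-edited list, but if the list ever comes from a file or another tool, a check along these lines might be worth adding. This is only a sketch; `is_safe_hostname` is a hypothetical helper, not part of the original script, and the rule it applies (dot-separated labels of letters, digits and hyphens) is an assumption about what a host name should look like here.

```perl
use strict;
use warnings;

# Hypothetical helper: accept only dot-separated labels made of letters,
# digits and hyphens (no leading or trailing hyphen), at most 253 characters.
sub is_safe_hostname {
    my ($name) = @_;
    return 0 unless defined $name && length($name) >= 1 && length($name) <= 253;
    my $label = qr/[A-Za-z0-9](?:[A-Za-z0-9-]{0,61}[A-Za-z0-9])?/;
    return $name =~ /\A$label(?:\.$label)*\z/ ? 1 : 0;
}

# The third entry is a deliberately hostile example.
for my $candidate ('coriolis.ch', 'textfiles.com', 'example.com; rm -rf /') {
    printf "%-25s %s\n", $candidate, is_safe_hostname($candidate) ? "ok" : "rejected";
}
```

In the main loop, a name that fails the check could simply be added to `$error_sites` instead of being passed to openssl.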