I manage a handful of websites (around 60), and to automate my job I wrote a script to check for the expiration of SSL certificates.

Most of the sites I manage use LetsEncrypt and are self-hosted by me on Linode, but sometimes (never?) certbot renew doesn’t work, or some external hosting company decides to renew the certificate only 2 days before it expires. Why? I don’t know.

Certbot automatically renews a LetsEncrypt certificate once fewer than 30 days remain, so this script will rarely report a problem.

The script runs daily and emails me when a host certificate has fewer than 25 days left, so I can check it manually.

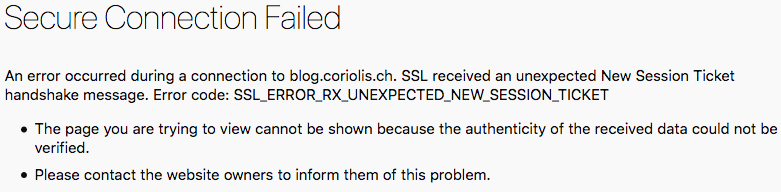

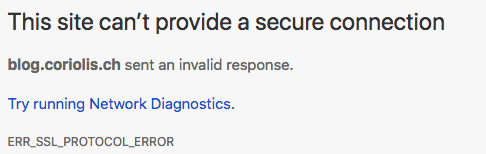
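The warning step can be sketched on its own as a pure function, which makes it easy to try without touching the network. This is only a minimal sketch, not the script itself: the function name `expiring_soon`, the `example.org` entry, and the sample day counts are made up for illustration, and the 25-day default is assumed to match the cutoff used in the script.

```perl
#!/usr/bin/perl
use strict;
use warnings;

# Hypothetical helper: given a hash ref of site => days remaining, return one
# warning line per site that is below the threshold, soonest expiry first.
sub expiring_soon {
    my ($days_left, $threshold) = @_;
    $threshold //= 25;    # assumption: same cutoff as the script

    my @warnings;
    for my $site (sort { $days_left->{$a} <=> $days_left->{$b} || $a cmp $b } keys %$days_left) {
        my $days = $days_left->{$site};
        next if $days >= $threshold;
        my $unit = $days == 1 ? "day" : "days";
        push @warnings, "$site => in $days $unit";
    }
    return @warnings;
}

# Sample data, invented for the example.
my %sample = ('coriolis.ch' => 62, 'textfiles.com' => 1, 'example.org' => 12);
print "$_\n" for expiring_soon(\%sample);
```

Keeping the decision separate from the certificate lookup means the threshold can be changed, or the list sorted differently, without going near the openssl call.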

The script originally used Net::SSL::ExpireDate to read the expiration date, but that module doesn’t seem to like Cloudflare certificates, so I added a second function that gets the certificate expiration date using openssl.
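For reference, `openssl x509 -noout -enddate` prints a single line such as `notAfter=Jun  1 12:00:00 2030 GMT`. Here is a minimal sketch of turning that line into a number of days, assuming the date is always reported in GMT and using the core Time::Piece module rather than Date::Parse. The function name `days_until` and the sample date are invented for illustration.

```perl
#!/usr/bin/perl
use strict;
use warnings;
use Time::Piece;

# Hypothetical helper: convert an "-enddate" line into whole days remaining.
# Returns undef when the line does not look like openssl's notAfter output.
sub days_until {
    my ($line, $now) = @_;
    $now //= time;

    my ($date) = $line =~ /notAfter=(.+?)\s+GMT\s*$/ or return;
    $date =~ s/\s+/ /g;    # openssl pads single-digit days with two spaces

    # Called as a class method, strptime treats the date as UTC. It dies on a
    # malformed date, so trap that and report failure instead.
    my $expiry = eval { Time::Piece->strptime($date, "%b %d %H:%M:%S %Y") };
    return unless defined $expiry;

    return int(($expiry->epoch - $now) / 86400);
}

# Sample line, invented for the example.
my $days = days_until("notAfter=Jun  1 12:00:00 2030 GMT");
print defined $days ? "expires in $days days\n" : "could not parse date\n";
```

Passing the current time as an optional second argument is a design choice that keeps the function easy to check against a fixed date.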

The script is available as a GitHub gist:

https://gist.github.com/sburlot/9a26255cc5b7d6b703fb37d40867baec

Usage: enter the list of sites by modifying the line:

`my @sites = qw/coriolis.ch textfiles.com/;`

and run the script (via crontab, after your certbot renew cron job).

Helpful links:

https://prefetch.net/articles/checkcertificate.html

https://www.cilogon.org/cert-expire

```perl
#!/usr/bin/perl
# vi:set ts=4 nu:

use strict;
use warnings;

use POSIX 'strftime';
use Net::SSL::ExpireDate;
use Date::Parse;
use Data::Dumper;
use MIME::Lite;

my @sites = qw/coriolis.ch textfiles.com/;

my $status      = "";    # summary, soonest expiry first (collected but not output anywhere)
my $error_sites = "";    # body of the warning email
my %expiration_sites;    # site => days until expiration

################################################################################################
# Record the days left for a site and flag it when fewer than 25 remain.
sub record_expiration {
    my ($site, $time) = @_;

    my $days = int(($time - time) / 86400);
    $expiration_sites{$site} = $days;
    print "$site expires in $days days\n";

    if ($days < 25) {
        # "1 day", but "0 days" and "-3 days"
        $error_sites .= "$site => in $days day" . ($days == 1 ? "" : "s") . "\n";
    }
}

################################################################################################
# Get the expiration date by asking openssl directly.
sub check_site_with_openssl {
    my $site = shift @_;

    # $site is interpolated into a shell command, so only accept plain hostnames.
    if ($site !~ /^[A-Za-z0-9][A-Za-z0-9.-]*$/) {
        $error_sites .= "$site is not a valid hostname\n";
        return;
    }

    my $expire_date = `echo | openssl s_client -servername $site -connect $site:443 2>&1 | openssl x509 -noout -enddate 2>&1`;

    if ($expire_date !~ /notAfter/) {
        print "Error while getting info for certificate: $site\n";
        $error_sites .= "$site has no expiration date\n";
        return;
    }

    chomp $expire_date;
    $expire_date =~ s/notAfter=//;

    my $time = str2time($expire_date);
    if (!defined $time) {
        $error_sites .= "$site has an unreadable expiration date: $expire_date\n";
        return;
    }

    record_expiration($site, $time);
}

################################################################################################
# Original check using Net::SSL::ExpireDate. Kept for reference, no longer called.
sub check_site {
    my $site = shift @_;

    # we have an error for sites served via Cloudflare: record type is SSL3_AL_FATAL
    # Net::SSL doesn't support SSL3??
    my $ed = Net::SSL::ExpireDate->new( https => $site );
    #print Dumper $ed;

    my $expire_date = eval { $ed->expire_date };    # returns a DateTime instance
    if (defined $expire_date) {
        # use the epoch directly instead of re-parsing the stringified DateTime
        record_expiration($site, $expire_date->epoch);
    } else {
        $error_sites .= "$site has no expiration date\n"; # or has another error, but I'll check manually.
    }
}

################################################################################################
sub send_email {
    my $message = shift @_;

    my $msg = MIME::Lite->new(
        From    => 'me@website.com',
        To      => 'me@website.com',
        Subject => 'SSL Certificates',
        Data    => "One or more certificates should be renewed:\n\n$message\n"
    );
    $msg->send;
}

################################################################################################
print strftime "%F\n", localtime;
print "=" x 30 . "\n";

for my $site (sort @sites) {
    check_site_with_openssl($site);
}

# sort by days remaining, soonest expiration first
foreach my $site (sort { $expiration_sites{$a} <=> $expiration_sites{$b} } keys %expiration_sites) {
    $status .= "$site expires in " . $expiration_sites{$site} . " days\n";
}

print "=" x 30 . "\n";

if ($error_sites ne "") {
    send_email($error_sites);
}
```